Set Up a Fully Private Amazon EKS on AWS Fargate Cluster

Regulated industries need to host their workloads as securely as possible, and a fully private Amazon EKS on AWS Fargate cluster is one way to address that need. Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. AWS Fargate is a technology that provides on-demand, right-sized compute capacity for containers. Each pod running on Fargate has its own isolation boundary and does not share the underlying kernel, CPU resources, memory resources, or elastic network interface with another pod, which is attractive from a compliance point of view. With AWS Fargate, you no longer have to provision, configure, or scale groups of virtual machines to run containers. This removes the need to choose server types, decide when to scale your node groups, or optimise cluster packing.

Source code for this post is hosted at https://github.com/k8s-dev/private-eks-fargate

Constraints

- No internet connectivity to the Fargate cluster and no public subnets, except for the bastion host subnet

- Fully private access for Amazon EKS cluster’s Kubernetes API server endpoint

- All AWS services communicate with this cluster through VPC interface endpoints or gateway endpoints, that is, over private AWS access

A VPC endpoint enables private connections between your VPC and supported AWS services. Traffic between the VPC and the AWS service does not leave the Amazon network, so this solution does not require an internet gateway or a NAT device, except for the bastion host subnet.

Prerequisites

- At least 2 private subnets in the VPC, because pods running on Fargate are only supported on private subnets

- A bastion host in a public subnet in the same VPC to connect to the EKS on Fargate cluster via kubectl

- AWS CLI installed on this bastion host and configured with credentials and a default region

- VPC interface endpoints and a gateway endpoint for the AWS services that your cluster uses

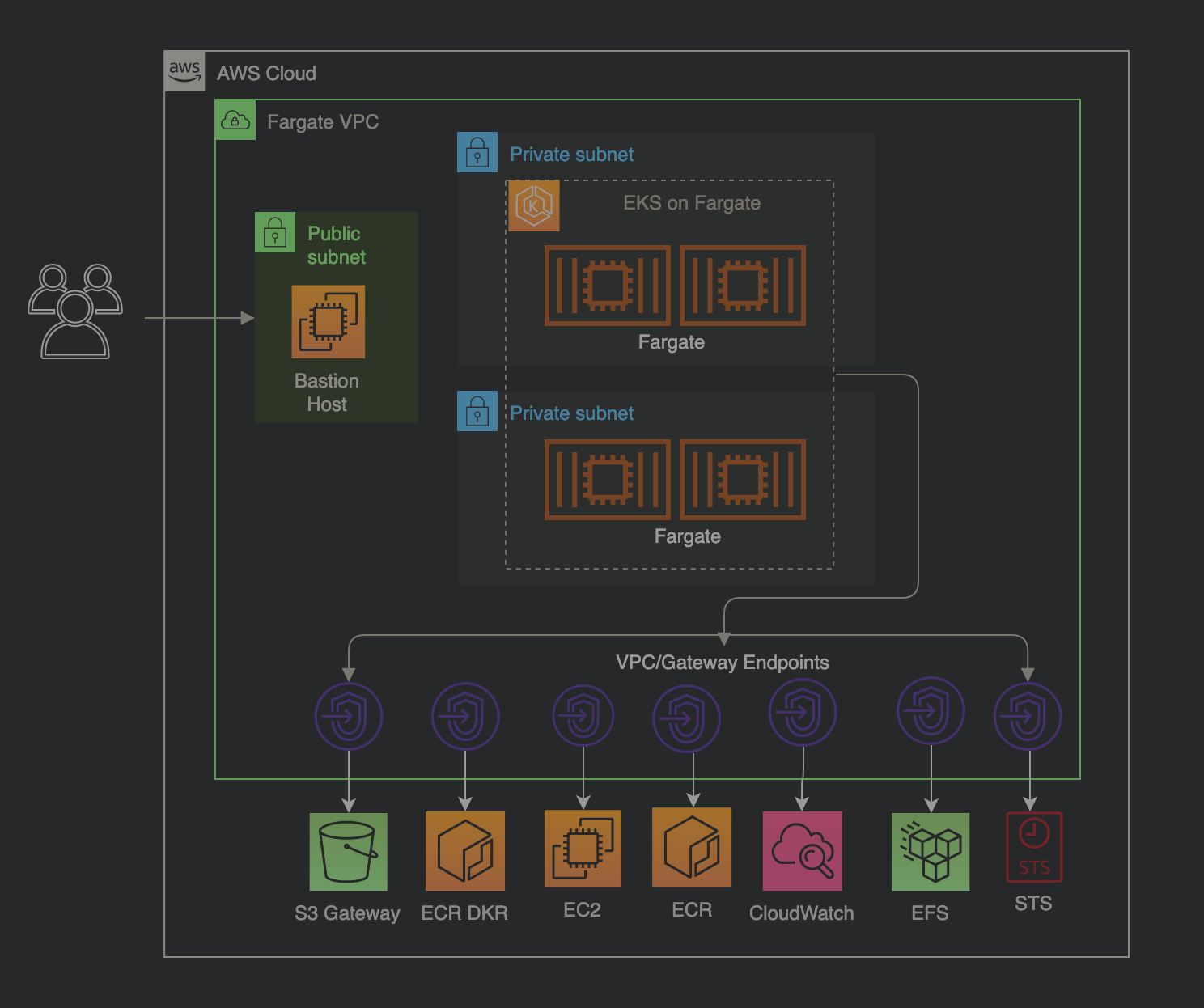

Design Diagram

This diagram shows the high-level design of the implementation. The EKS on Fargate cluster spans 2 private subnets, and a bastion host is provisioned in a public subnet with internet connectivity. All communication with the EKS cluster is initiated from this bastion host. The EKS cluster is fully private and communicates with various AWS services via VPC interface and gateway endpoints.

Architecture diagram

Implementation Steps

Fargate pod execution role

Create a Fargate pod execution role, which allows the Fargate infrastructure to make calls to AWS APIs on your behalf, for example to pull container images from Amazon ECR or route logs to other AWS services. Follow the steps at https://docs.aws.amazon.com/eks/latest/userguide/fargate-getting-started.html#fargate-sg-pod-execution-role

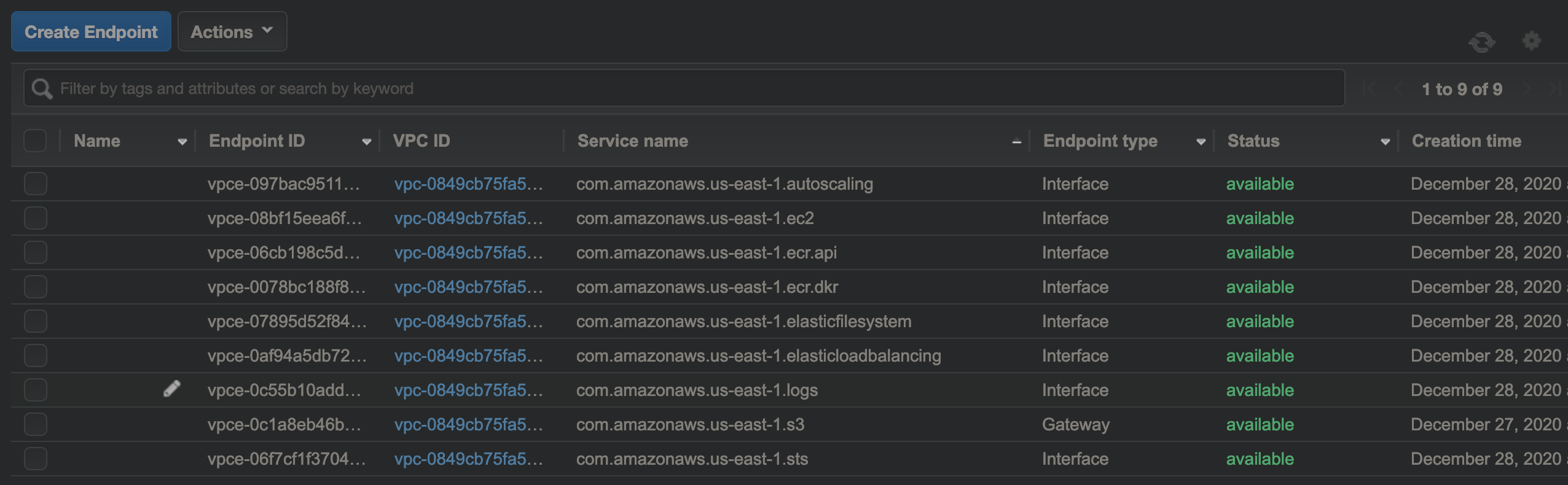
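
If you prefer the CLI over the console for this step, the role could be created roughly as follows. This is a minimal sketch rather than the method used in the original walkthrough: the role name is the placeholder that appears in later examples, and the two functions are hypothetical helpers. The trust principal (eks-fargate-pods.amazonaws.com) and the managed policy (AmazonEKSFargatePodExecutionRolePolicy) are the ones described in the AWS guide linked above.

```shell
# Placeholder role name, matching the examples later in this post.
ROLE_NAME="${ROLE_NAME:-private-fargate-pod-execution-role}"

# Print a trust policy that lets the EKS Fargate service assume the role.
fargate_trust_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "eks-fargate-pods.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Create the role and attach the AWS managed pod execution policy.
create_pod_execution_role() {
  aws iam create-role \
    --role-name "$ROLE_NAME" \
    --assume-role-policy-document "$(fargate_trust_policy)"
  aws iam attach-role-policy \
    --role-name "$ROLE_NAME" \
    --policy-arn arn:aws:iam::aws:policy/AmazonEKSFargatePodExecutionRolePolicy
}
```

Calling create_pod_execution_role creates the role and attaches the policy. The role ARN printed by the first command is the value later passed as --pod-execution-role-arn when creating Fargate profiles.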

Create AWS Services VPC Endpoints

Since we are rolling out a fully private EKS on Fargate cluster, it should have private-only access to AWS services such as ECR, CloudWatch, Elastic Load Balancing and S3.

This step is essential so that pods running on the Fargate cluster can pull container images, push logs to CloudWatch and interact with load balancers.

See the full list of endpoints that your cluster may use here: https://docs.aws.amazon.com/eks/latest/userguide/private-clusters.html#vpc-endpoints-private-clusters

Set up the VPC interface endpoints and gateway endpoints in the same VPC for the services that you plan to use in your EKS on Fargate cluster by following the steps at https://docs.aws.amazon.com/vpc/latest/userguide/vpce-interface.html#create-interface-endpoint

We need to provision the following endpoints at a minimum:

- Interface endpoints for ECR (both ecr.api and ecr.dkr) to pull container images

- A gateway endpoint for S3 to pull the actual image layers

- An interface endpoint for EC2

- An interface endpoint for STS to support Fargate and IAM Roles for Service Accounts

- An interface endpoint for CloudWatch logging (logs) if CloudWatch logging is required

Interface Endpoints

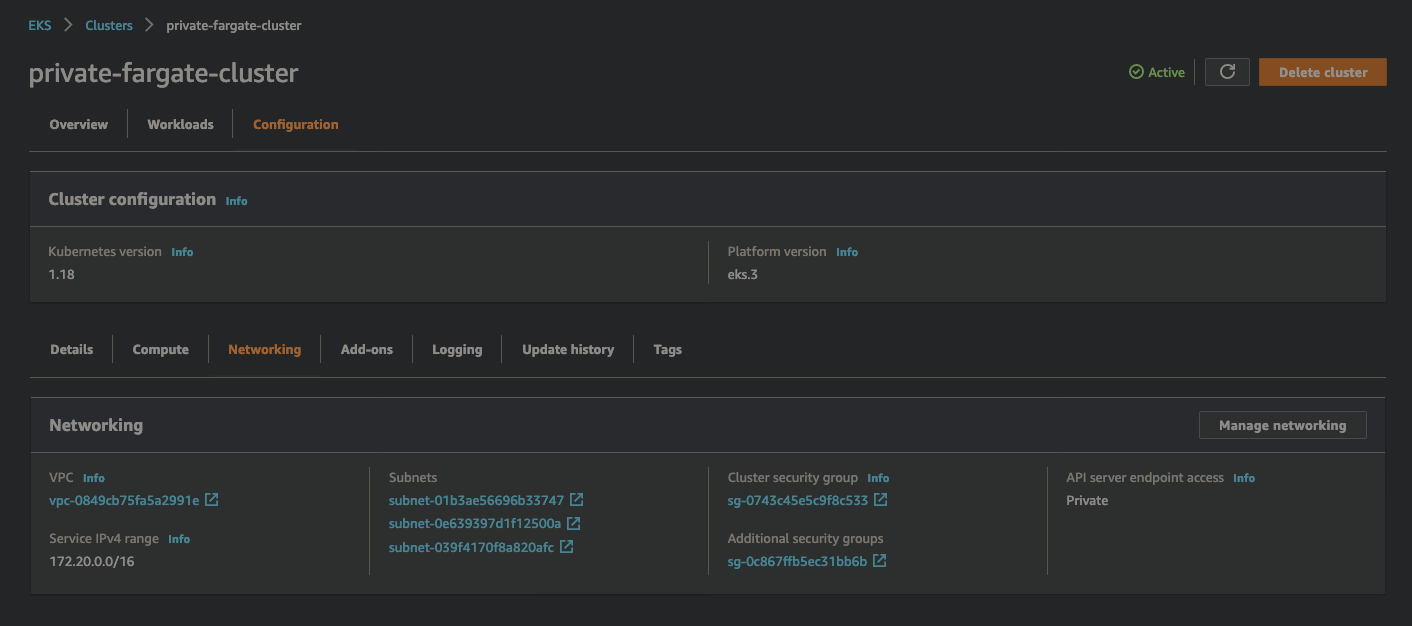
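
The minimum endpoint set listed above could also be provisioned from the CLI. The sketch below is illustrative only: the VPC, security group and route table IDs are hypothetical placeholders, the subnet IDs are the sample values used elsewhere in this post, and the function names are made up for this example. It assumes the VPC has DNS support and DNS hostnames enabled (needed for private DNS on interface endpoints) and that the endpoint security group allows HTTPS (443) from the private subnets.

```shell
# All IDs below are placeholders; replace them with your own.
REGION="${REGION:-us-east-1}"
VPC_ID="${VPC_ID:-vpc-0123456789abcdef0}"
SUBNET_IDS="${SUBNET_IDS:-subnet-01b3ae56696b33747 subnet-0e639397d1f12500a}"
ENDPOINT_SG_IDS="${ENDPOINT_SG_IDS:-sg-0123456789abcdef0}"
ROUTE_TABLE_IDS="${ROUTE_TABLE_IDS:-rtb-0123456789abcdef0}"

# Print the interface endpoint service names for a region ($1).
interface_services() {
  for svc in ecr.api ecr.dkr ec2 sts logs; do
    echo "com.amazonaws.$1.$svc"
  done
}

# Create one interface endpoint per service in the private subnets.
create_interface_endpoints() {
  for service in $(interface_services "$REGION"); do
    # SUBNET_IDS and ENDPOINT_SG_IDS are left unquoted on purpose so that
    # each ID is passed to the CLI as a separate argument.
    aws ec2 create-vpc-endpoint \
      --region "$REGION" \
      --vpc-id "$VPC_ID" \
      --vpc-endpoint-type Interface \
      --service-name "$service" \
      --subnet-ids $SUBNET_IDS \
      --security-group-ids $ENDPOINT_SG_IDS \
      --private-dns-enabled
  done
}

# Create the S3 gateway endpoint and attach it to the private route tables.
create_s3_gateway_endpoint() {
  aws ec2 create-vpc-endpoint \
    --region "$REGION" \
    --vpc-id "$VPC_ID" \
    --vpc-endpoint-type Gateway \
    --service-name "com.amazonaws.$REGION.s3" \
    --route-table-ids $ROUTE_TABLE_IDS
}
```

Run create_interface_endpoints and create_s3_gateway_endpoint once the placeholders are set. Add further service names to interface_services if your workloads use other AWS services.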

Create Cluster with Private API-Server Endpoint

Use the AWS CLI to create the EKS cluster in the designated VPC. Before you run the command, replace the placeholders in angle brackets with your actual cluster name, cluster IAM role name, private subnet IDs and security group ID, and adjust the Kubernetes version and account ID as needed. Note that --role-arn expects the EKS cluster IAM role (the one trusted by eks.amazonaws.com), not the Fargate pod execution role, which is supplied later when creating Fargate profiles. It can take 10-15 minutes for the cluster to reach the ACTIVE state.

aws eks create-cluster --name <private-fargate-cluster> --kubernetes-version 1.18 \
  --role-arn arn:aws:iam::1234567890:role/<eks-cluster-role-name> \
  --resources-vpc-config subnetIds=<subnet-01b3ae56696b33747,subnet-0e639397d1f12500a,subnet-039f4170f8a820afc>,securityGroupIds=<sg-0c867ffb5ec31bb6b>,endpointPublicAccess=false,endpointPrivateAccess=true \
  --logging '{"clusterLogging":[{"types":["api","audit","authenticator","controllerManager","scheduler"],"enabled":true}]}'

The above command creates a private EKS cluster with a private-only API endpoint and enables logging for Kubernetes control plane components such as the API server and scheduler. Adjust the log types to your compliance needs.
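
Rather than polling the console, you could wait for the cluster from the CLI and confirm that the endpoint settings came out as intended. This is a sketch under assumptions: the cluster name is the sample value from this post, the helper names are hypothetical, and is_fully_private simply compares the two values returned by describe-cluster (public access first, private access second).

```shell
CLUSTER_NAME="${CLUSTER_NAME:-private-fargate-cluster}"

# Block until the cluster is ACTIVE, then print
# "<endpointPublicAccess> <endpointPrivateAccess>" as text.
wait_for_cluster() {
  aws eks wait cluster-active --name "$CLUSTER_NAME"
  aws eks describe-cluster --name "$CLUSTER_NAME" \
    --query 'cluster.resourcesVpcConfig.[endpointPublicAccess,endpointPrivateAccess]' \
    --output text
}

# Succeed only when public access is off and private access is on.
# $1 is the text printed by describe-cluster above.
is_fully_private() {
  # Lower-case first so the check does not depend on how booleans are rendered.
  set -- $(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  [ "$1" = "false" ] && [ "$2" = "true" ]
}
```

For example, `is_fully_private "$(wait_for_cluster)" && echo "private endpoint only"` prints the message only when the API server endpoint is private.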

You can connect to this private cluster in 3 ways: via a bastion host, AWS Cloud9, or a connected network, as listed at https://docs.aws.amazon.com/eks/latest/userguide/cluster-endpoint.html#private-access . For simplicity, we will go with the EC2 bastion host approach.

While you are waiting for the cluster to become active, let’s set up the bastion host. This is needed because the EKS on Fargate cluster is in private subnets only, without internet connectivity, and the API server access is set to private only. The bastion host is created in a public subnet in the same VPC and is used to reach the EKS on Fargate cluster. The only requirement is to install kubectl and AWS CLI v2 on it, both of which are available from their official download pages.

All subsequent commands are run from the bastion host.

Set up access to EKS on Fargate Cluster

kubectl on the bastion host needs to talk to the API server in order to manage the EKS on Fargate cluster. Run the following command to set up this communication. Make sure the AWS CLI is configured before running it.

aws eks --region <region-code> update-kubeconfig --name <cluster_name>

This writes the cluster configuration to the kubeconfig file at ~/.kube/config, which enables running kubectl commands against the EKS cluster.

Enable logging (optional)

Logging can be very useful for debugging issues while rolling out the application. Set up logging in the EKS on Fargate cluster by following https://docs.aws.amazon.com/eks/latest/userguide/fargate-logging.html

Create Fargate Profile

In order to schedule pods on Fargate in your cluster, you must define a Fargate profile that specifies which pods should use Fargate when they are launched. Fargate profiles are immutable. A profile is essentially a combination of a namespace and, optionally, labels. Pods that match a selector (by matching the selector's namespace and all of the labels specified in the selector) are scheduled on Fargate.

aws eks create-fargate-profile --fargate-profile-name fargate-custom-profile \
  --cluster-name private-fargate-cluster \
  --pod-execution-role-arn arn:aws:iam::1234567890:role/private-fargate-pod-execution-role \
  --subnets subnet-01b3ae56696b33747 subnet-0e639397d1f12500a subnet-039f4170f8a820afc \
  --selectors namespace=custom-space

This command creates a Fargate profile in the cluster and tells EKS to schedule pods in the ‘custom-space’ namespace on Fargate. However, we need to make a few changes to our cluster before we are able to run applications on it.
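
Profile creation is asynchronous, so it can help to wait until the profile is active and inspect what was created. A minimal sketch, assuming the sample cluster and profile names used in this post (the helper name wait_for_profile is hypothetical):

```shell
CLUSTER_NAME="${CLUSTER_NAME:-private-fargate-cluster}"
PROFILE_NAME="${PROFILE_NAME:-fargate-custom-profile}"

# Wait for the profile to become ACTIVE, then show its status and selectors.
wait_for_profile() {
  aws eks wait fargate-profile-active \
    --cluster-name "$CLUSTER_NAME" \
    --fargate-profile-name "$PROFILE_NAME"
  aws eks describe-fargate-profile \
    --cluster-name "$CLUSTER_NAME" \
    --fargate-profile-name "$PROFILE_NAME" \
    --query 'fargateProfile.[status,selectors]'
}
```

The selectors in the output show which namespace (and labels, if any) a pod must match to be scheduled on Fargate.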

Private EKS on Fargate Cluster

Update CoreDNS

By default, CoreDNS is configured to run on Amazon EC2 infrastructure on Amazon EKS clusters. Since our cluster has no EC2 nodes, we need to create a Fargate profile that the CoreDNS pods can use, and then update the CoreDNS deployment to remove the eks.amazonaws.com/compute-type : ec2 annotation. Update the following Fargate profile JSON with your own cluster name, account ID, role ARN and subnet IDs, and save it in a local file (named updated-coredns.json in the command further down).

{
  "fargateProfileName": "coredns",
  "clusterName": "private-fargate-cluster",
  "podExecutionRoleArn": "arn:aws:iam::1234567890:role/private-fargate-pod-execution-role",
  "subnets": [
    "subnet-01b3ae56696b33747",
    "subnet-0e639397d1f12500a",
    "subnet-039f4170f8a820afc"
  ],
  "selectors": [
    {
      "namespace": "kube-system",
      "labels": {
        "k8s-app": "kube-dns"
      }
    }
  ]
}

Apply this JSON with the following command:

aws eks create-fargate-profile --cli-input-json file://updated-coredns.json

Next step is to remove the annotation from CoreDNS pods, allowing them to be scheduled on Fargate infrastructure:

kubectl patch deployment coredns -n kube-system --type json \
  -p='[{"op": "remove", "path": "/spec/template/metadata/annotations/eks.amazonaws.com~1compute-type"}]'

The final step to make CoreDNS function properly is to recreate these pods. We will use a rollout restart of the deployment for this:

kubectl rollout restart -n kube-system deployment.apps/coredns

After a while, double-check that the CoreDNS pods are in the Running state in the kube-system namespace before proceeding further.
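
One way to perform this check is sketched below. The 5 minute timeout is an arbitrary assumption, and verify_coredns is a hypothetical helper name. The label k8s-app=kube-dns is the same one used in the CoreDNS Fargate profile selector above.

```shell
# Wait for the restarted CoreDNS deployment, then list its pods with node names.
verify_coredns() {
  kubectl rollout status deployment/coredns -n kube-system --timeout=5m
  kubectl get pods -n kube-system -l k8s-app=kube-dns -o wide
}
```

In the wide output, the NODE column should show Fargate nodes if the pods were picked up by the coredns profile.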

After these steps, the private EKS on Fargate cluster is up and running. Because this cluster has no internet connectivity, pods scheduled on it cannot pull container images from public registries such as Docker Hub. The solution is to set up an ECR repository, host private images in it, and refer to these images in pod manifests.

Set up ECR registry

This involves creating an ECR repository, pulling the image locally, tagging it appropriately and pushing it to the ECR registry. The image is pushed to ECR from the bastion host and is then pulled by EKS on Fargate through the ECR VPC endpoints.

aws ecr create-repository --repository-name nginx
docker pull nginx:latest
docker tag nginx <1234567890>.dkr.ecr.<region-code>.amazonaws.com/nginx:v1
aws ecr get-login-password --region <region-code> | docker login --username AWS --password-stdin <1234567890>.dkr.ecr.<region-code>.amazonaws.com
docker push <1234567890>.dkr.ecr.<region-code>.amazonaws.com/nginx:v1
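
If you need to mirror more than one image, the pull, tag and push steps can be wrapped in a small helper. This is only a sketch: ecr_registry and mirror_image are hypothetical names, the account ID and region defaults are placeholders, and it assumes Docker is installed on the bastion host, the target repository already exists, and docker login has been run as shown above.

```shell
# Placeholders; the account ID can be looked up with
#   aws sts get-caller-identity --query Account --output text
ACCOUNT_ID="${ACCOUNT_ID:-1234567890}"
REGION="${REGION:-us-east-1}"

# Build the private registry host name from an account ID ($1) and region ($2).
ecr_registry() {
  echo "$1.dkr.ecr.$2.amazonaws.com"
}

# Copy a public image into ECR.
# $1 = source image (e.g. nginx:latest), $2 = ECR repository name, $3 = target tag
mirror_image() {
  registry="$(ecr_registry "$ACCOUNT_ID" "$REGION")"
  docker pull "$1"
  docker tag "$1" "$registry/$2:$3"
  docker push "$registry/$2:$3"
}
```

For example, `mirror_image nginx:latest nginx v1` repeats the steps above for the nginx image.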

Run Application in EKS on Fargate Cluster

Now that we have pushed the nginx image to ECR, we can reference it in the deployment YAML.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
  namespace: custom-space
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: <1234567890>.dkr.ecr.<region-code>.amazonaws.com/nginx:v1
          ports:
            - containerPort: 80
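
The manifest still has to be applied, and the custom-space namespace has to exist, since a Fargate profile only selects pods and does not create the namespace. A minimal sketch, assuming the manifest was saved locally (the file name passed in is up to you, and deploy_sample_app is a hypothetical helper):

```shell
APP_NAMESPACE="${APP_NAMESPACE:-custom-space}"

# Apply the deployment manifest ($1) and wait for the rollout to finish.
deploy_sample_app() {
  # Create the namespace only if it is not there yet.
  kubectl get namespace "$APP_NAMESPACE" >/dev/null 2>&1 \
    || kubectl create namespace "$APP_NAMESPACE"
  kubectl apply -f "$1"
  kubectl rollout status deployment/sample-app -n "$APP_NAMESPACE" --timeout=10m
}
```

For example, `deploy_sample_app deployment.yaml`. The generous timeout is an assumption, chosen because Fargate has to provision capacity for each pod before it can start.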

After a while, cross-check that the nginx application is in the Running state.

kubectl get pods -n custom-space

NAME                          READY   STATUS    RESTARTS   AGE
sample-app-578d67447d-fw6kp   1/1     Running   0          8m52s
sample-app-578d67447d-r68v2   1/1     Running   0          8m52s
sample-app-578d67447d-vbbfr   1/1     Running   0          8m52s

Describing the deployment should produce output like this.

kubectl describe deployment -n custom-space

Name:                   sample-app
Namespace:              custom-space
CreationTimestamp:      Tue, 19 Dec 2020 23:43:04 +0000
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=nginx
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:        1234567890.dkr.ecr.<region-code>.amazonaws.com/nginx:v1
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   sample-app-578d67447d (3/3 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  10m   deployment-controller  Scaled up replica set sample-app-578d67447d to 3

To confirm that the nginx application is actually running on Fargate infrastructure, check the EC2 console page. You will not find any EC2 instances running there as part of the EKS cluster, as the underlying infrastructure is fully managed by AWS. The nodes reported by kubectl are Fargate nodes:

kubectl get nodes

NAME                                    STATUS   ROLES    AGE   VERSION
fargate-ip-10-172-36-16.ec2.internal    Ready    <none>   12m   v1.18.8-eks-7c9bda
fargate-ip-10-172-10-28.ec2.internal    Ready    <none>   12m   v1.18.8-eks-7c9bda
fargate-ip-10-172-62-216.ec2.internal   Ready    <none>   12m   v1.18.8-eks-7c9bda

Another experiment to try is to create a new deployment with an image that is not hosted in ECR. The pod is expected to stay in an image pull error state (such as ErrImagePull or ImagePullBackOff) rather than start, because a private cluster cannot reach Docker Hub on the public internet to pull the image.
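
A quick way to run this experiment is sketched below. The deployment name public-nginx and the helper names are hypothetical, and the namespace is the custom-space one covered by the Fargate profile. kubectl create deployment labels the pods with app=<deployment name>, which is what the label selector relies on.

```shell
# Start a deployment that references a public image not mirrored to ECR.
try_public_image() {
  kubectl create deployment public-nginx --image=nginx:latest -n custom-space
  kubectl get pods -n custom-space -l app=public-nginx
  # The Events section at the end of the describe output shows why the pull fails.
  kubectl describe pods -n custom-space -l app=public-nginx
}

# Remove the test deployment again.
cleanup_public_image() {
  kubectl delete deployment public-nginx -n custom-space
}
```

Run try_public_image, repeat the kubectl get pods command after a few minutes to watch the status, and finish with cleanup_public_image.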

Closing

- In this experiment, we created a private EKS on Fargate cluster with a private API endpoint.

- This deployment solves some of the compliance challenges faced by BFSI and other regulated sectors.

Please reach out if you face challenges replicating this in your own environment. PRs to improve this documentation are welcome. Happy Learning!